Machine Learning – Simplified

Anonym

Today's blog is from a guest blogger, Tom Reed from NVIDIA. Some people think graphics cards are just for rendering and visualization, but with a high-end GPU and some scripting, a laptop can have the processing power of a supercomputer! NV5's Custom Solutions Group has developed tools using the NVIDIA CUDA framework to GPU-accelerate key image processing tasks like orthorectification, atmospheric correction, and image transforms like PCA and ICA. We have also created a library for GPU-enabling IDL called AMPE (Advanced Massively-parallel Processing Engine). These tools exist as a licensed technology and provide a huge bang for your buck if you have real-time image processing needs (like disaster mapping) and large data volumes to process (such as commercial data production). NVIDIA has kindly provided us with K40 graphics cards and we’re in the process of re-benchmarking our GPU stats with the new hardware, so stay tuned.

This blog discusses Machine Learning (ML) and Deep Neural Networks (DNNs), which are excellent analysis-intensive examples of how GPUs can provide additional processing power.

Machine learning, like the other two-word hot topics (Cloud Computing and Big Data), is becoming so broadly interpreted that it is both meaningless and all-encompassing. I’m not here to fix that issue, but I do want to put on my pragmatist goggles and share in simple terms why I think Machine Learning (ML) has game-changing value for current and future GIS/ISR use cases.

For simplification, let’s quickly focus on a subset of Machine Learning called Deep Neural Networks (DNN). This area of ML is already broadly used in real-world applications, from web search prediction to understanding that you want lunch and not questionable internet photos when you say “where can I find a good hot dog” into your mobile phone.

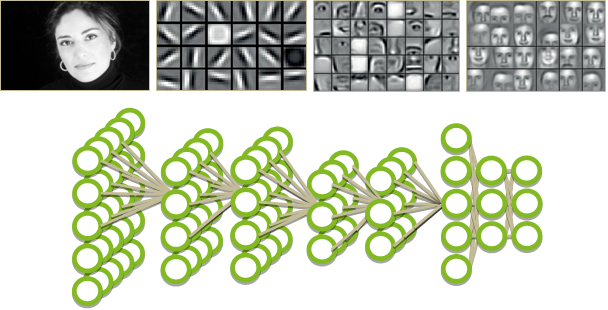

DNN is also widely used to detect and classify objects in imagery. It’s pretty versatile about the kind of input data, which we’ll get back to later, but let’s stay focused on the imagery theme. Many of you should be thinking, “I already have exquisite ways of detecting and classifying objects in imagery,” and you would be right. In this way DNN, aka Machine Learning, isn’t something new or foreign; it is just a different means to the same end.

So why care about DNN/ML? How is it better? The difference between traditional image processing approaches and the DNN approach is as much about future use and versatility as it is about being a better object detector. The versatility is really important when we want to ask more of our image detection and classification methods. Let’s look at an example. If I want to detect orange dodge balls in every photo or video on the internet, I could use a traditional approach that employs a series of pixel-based processing algorithms to filter out some pixels and enhance others to help find things that “look” like dodge balls. These filters need to be elegantly constructed by really smart software folks, based on what those folks perceive as the important features that define what a dodge ball is. This approach is also more or less static, meaning that if I want to find tea pots instead, the software has to be rethought and rewritten. It is also susceptible to mistaking pictures of oranges for orange dodge balls, and thus falsely detecting things that merely “look” like an orange dodge ball. DNN is very different in approaching this problem.
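To make the traditional approach concrete, here is a minimal sketch of a hand-built pixel rule. Everything in it is an assumption made up for illustration: the function names, the colour thresholds, and the tiny synthetic "photos" (lists of RGB tuples). It is not how any real product does it, but it shows how a rule written from human perception fires on an orange just as happily as on a dodge ball.

```python
# Hypothetical hand-tuned rule: a pixel counts as "dodge-ball orange" when
# red is strong, green is moderate, and blue is weak. Thresholds are made up.
def is_orange(pixel):
    r, g, b = pixel
    return r > 200 and 80 < g < 180 and b < 80


def looks_like_dodge_ball(pixels, min_fraction=0.3):
    """Flag an image when enough of its pixels pass the colour rule."""
    orange = sum(1 for p in pixels if is_orange(p))
    return orange / len(pixels) >= min_fraction


# Synthetic stand-ins for real photos, 100 pixels each.
dodge_ball_photo = [(240, 120, 30)] * 40 + [(90, 90, 90)] * 60     # ball on a grey floor
orange_fruit_photo = [(235, 140, 40)] * 50 + [(30, 120, 40)] * 50  # fruit among leaves
grey_wall_photo = [(120, 120, 120)] * 100

print(looks_like_dodge_ball(dodge_ball_photo))    # True
print(looks_like_dodge_ball(orange_fruit_photo))  # True: a false detection
print(looks_like_dodge_ball(grey_wall_photo))     # False
```

Switching the target to tea pots would mean throwing away `is_orange` and designing a new rule from scratch, which is the "static" problem described above.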

DNN has two steps. The first is a learning step, which creates a model for correctly identifying dodge balls by providing lots of example images that contain orange dodge balls and lots of images that don’t. The second is a classification step, which applies that model to find orange dodge balls in any general image or video frame. There’s a fundamental difference between what constitutes a dodge ball in the traditional approach and in the DNN approach.
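The two steps can be sketched in a few lines of plain Python. This is a deliberately tiny stand-in, not a real DNN: a single logistic unit replaces the many stacked layers, and each "image" is reduced to three made-up numbers (think mean red, green, and blue, scaled to 0..1). The data, the function names, and the training settings are all assumptions chosen for illustration.

```python
import math


def predict(weights, bias, features):
    """Return the model's probability that the example is a dodge ball."""
    z = bias + sum(w * x for w, x in zip(weights, features))
    return 1.0 / (1.0 + math.exp(-z))


def train(examples, labels, epochs=10000, learning_rate=0.5):
    """Step one: fit weights to labelled examples with batch gradient descent."""
    weights, bias = [0.0] * len(examples[0]), 0.0
    n = len(examples)
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * len(weights), 0.0
        for features, label in zip(examples, labels):
            error = predict(weights, bias, features) - label
            grad_b += error
            for i, x in enumerate(features):
                grad_w[i] += error * x
        weights = [w - learning_rate * g / n for w, g in zip(weights, grad_w)]
        bias -= learning_rate * grad_b / n
    return weights, bias


def classify(model, features):
    """Step two: apply the learned model to an example it has not seen."""
    weights, bias = model
    return predict(weights, bias, features) > 0.5


# Made-up training set: 1 = contains a dodge ball, 0 = does not.
examples = [
    [0.90, 0.50, 0.10], [0.95, 0.45, 0.15], [0.85, 0.55, 0.05], [0.90, 0.40, 0.20],
    [0.50, 0.50, 0.50], [0.10, 0.60, 0.20], [0.20, 0.20, 0.80], [0.90, 0.90, 0.90],
]
labels = [1, 1, 1, 1, 0, 0, 0, 0]

model = train(examples, labels)
print(classify(model, [0.90, 0.475, 0.125]))  # a new example resembling the positives
print(classify(model, [0.35, 0.35, 0.65]))    # a new example resembling the negatives
```

Nobody wrote a rule saying what a dodge ball looks like here; the weights come entirely from the labelled examples. Pointing the same code at tea pots only requires a different training set.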

Using DNN, the model isn’t static or based on human perception. It is highly abstract, and it is constructed by showing the DNN lots of pictures that contain dodge balls and lots that don’t, and letting the DNN derive which features it can use to most accurately separate correct detections from incorrect ones. This training process can be lengthy and require lots of computing power, but the results are very effective. Here’s why a GPU technology guy is telling this story: GPUs are particularly good at the computing tasks DNNs require and, hey, creating and manipulating images is why GPUs were invented in the first place, so this seems like a perfect fit.
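One way to see why the fit is so good: the core of a neural network layer is a pile of weighted sums, and each output can be computed without waiting for any other. The sketch below is a plain-Python illustration under that assumption (the function names, weights, and inputs are made up, and it runs on the CPU); a GPU would hand each of those independent sums to its own thread.

```python
def relu(x):
    """Common activation: pass positive values through, clamp the rest to zero."""
    return x if x > 0.0 else 0.0


def dense_layer(inputs, weights, biases):
    """One fully connected layer: every output is its own weighted sum.

    No output depends on another, so all of them could be computed at the
    same time; this list comprehension just does them one after another.
    """
    return [relu(b + sum(w * x for w, x in zip(row, inputs)))
            for row, b in zip(weights, biases)]


inputs = [1.0, 2.0, 3.0]
weights = [[0.5, 0.0, -0.5],   # weights feeding output 0
           [1.0, 1.0, 1.0]]    # weights feeding output 1
biases = [0.0, -1.0]

print(dense_layer(inputs, weights, biases))  # [0.0, 5.0]
```

A real network has layers with thousands of outputs and repeats this over many images during training, which is where the "lots of computing power" goes.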

Now back to the notion that DNNs are like traditional image processing approaches but more versatile. Let’s say I want to detect not only dodge balls but dodge balls hurtling towards a human. We could keep extrapolating this query into ever-increasing complexity and eventually get to something we can really use to answer a meaningful question. The beauty of DNN is that this more complex evolution of the query is just a natural progression of the same training/classification process. We just need to find data to train the model with and, I might add, we need no human intervention beyond feeding in imagery and helping tell the model how well it classifies things.

DNN doesn’t have to break things down into human-understandable features, so attributes that we can’t see or understand, but that are good at classifying an object or behavior, become quite useful. You could just as easily train a DNN with text data, signal data, or some fusion of unstructured sources of data. ML is a natural fit for multi-INT and upstream data fusion. NVIDIA is working with Exelis to figure out where ML can improve analysis capabilities and offer solutions to future challenges. Integrating DNNs into tasks already being done with GPUs seems like a convenient and efficient onramp to exploring machine learning.

I hope this simplistic discussion helps make machine learning less nebulous and more appealing.