Second Reproduction

To increase the number of visuals that can be shown in this blog post, we performed a simplified reproduction using NAIP data. Some of the differences between this reproduction and the first reproduction are:

- Only the B737 CAD model that comes with DIRSIG was used to generate the positive chips
- The classification only determined whether or not a plane was present in a patch
- DIRSIG was used to generate empty chips
- ENVI’s Deep Learning module was used to train the model and perform the classification

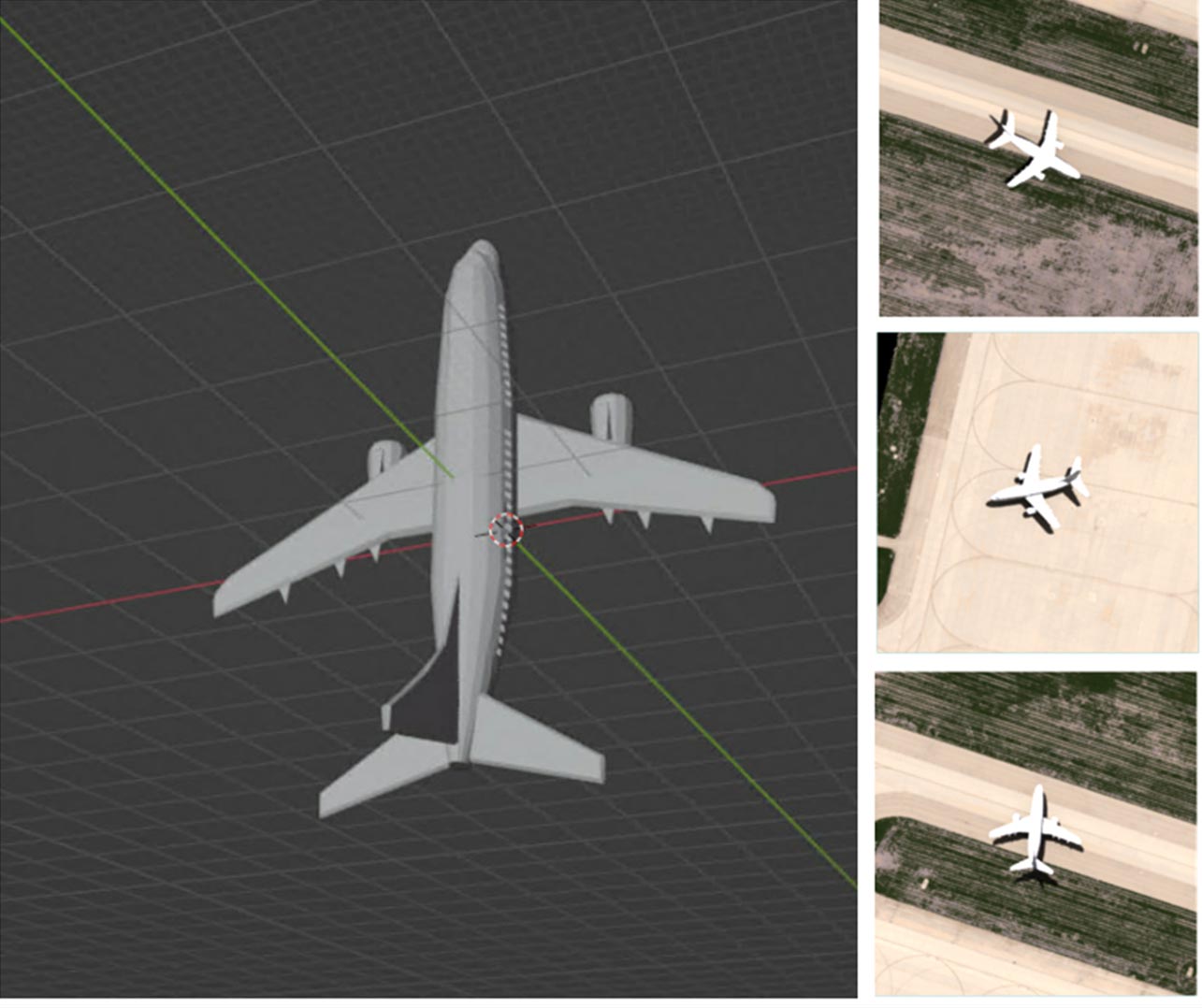

A screenshot of the B737 CAD model as well as a sample of the chips generated by DIRSIG from the model are shown below:

We trained a “Grid” model with the ENVI Deep Learning module using about 500 positive image chips and about 100 negative image chips. The “Grid” model is a new feature that will be included in the next version of ENVI Deep Learning. It will allow users to generate ResNet50 or ResNet101 models, and it produces an output grid of positive patches.

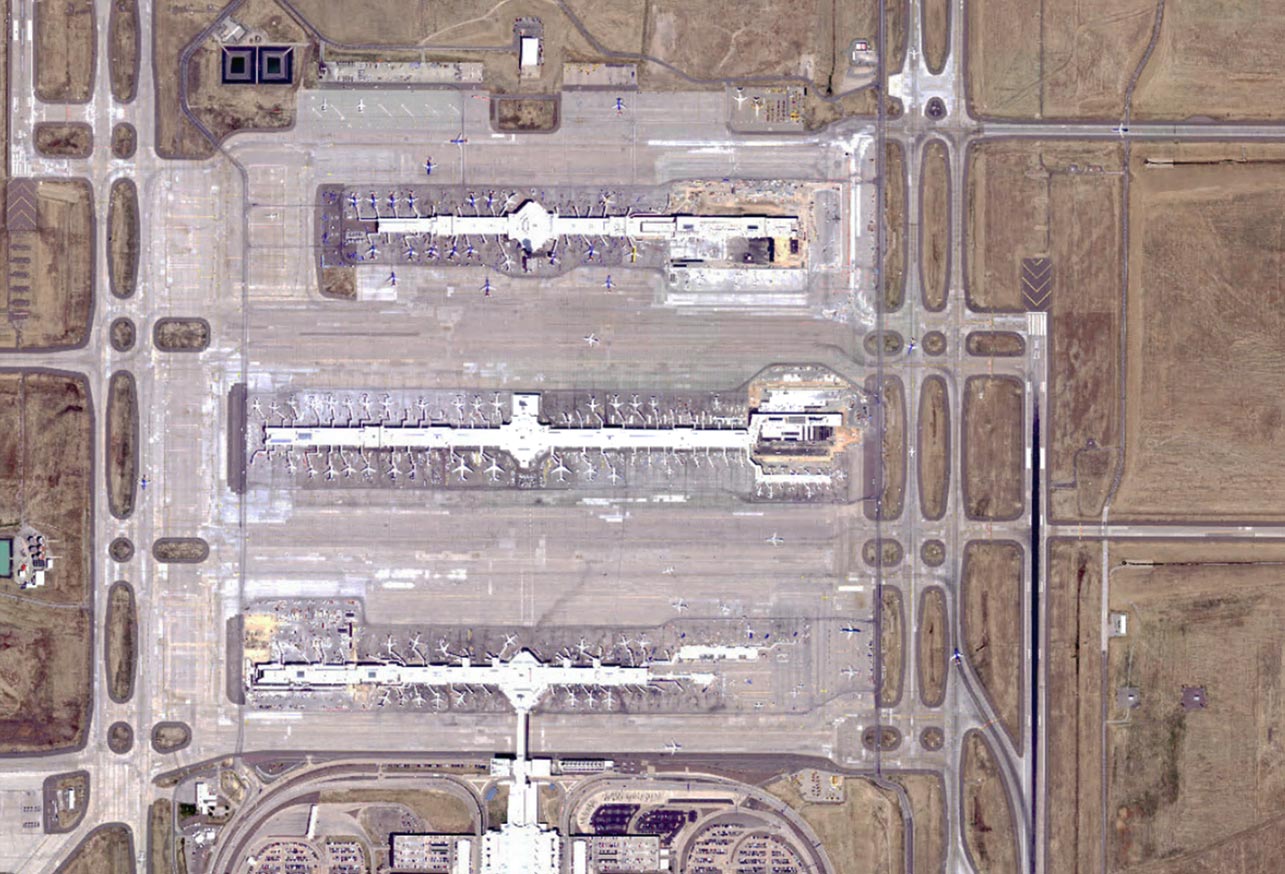
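To make the idea of an output grid of positive patches concrete, here is a minimal sketch in Python. It is not ENVI's API or the “Grid” model itself. The function name `classify_grid`, the `patch_size` parameter, and the `is_positive` callable (a stand-in for a trained classifier) are all assumptions made for illustration.

```python
import numpy as np


def classify_grid(image, patch_size, is_positive):
    """Split an image into non-overlapping square patches and label each one.

    image:       array of shape (rows, cols) or (rows, cols, bands)
    patch_size:  patch edge length in pixels (hypothetical parameter)
    is_positive: callable taking one patch and returning True/False;
                 a stand-in for a trained model, not ENVI's classifier
    Returns a boolean grid with one cell per patch.
    """
    n_rows = image.shape[0] // patch_size
    n_cols = image.shape[1] // patch_size
    grid = np.zeros((n_rows, n_cols), dtype=bool)
    for r in range(n_rows):
        for c in range(n_cols):
            patch = image[
                r * patch_size:(r + 1) * patch_size,
                c * patch_size:(c + 1) * patch_size,
            ]
            grid[r, c] = bool(is_positive(patch))
    return grid


# Toy scene: a 4x6 image with one bright 2x2 block standing in for a plane.
scene = np.zeros((4, 6), dtype=np.uint8)
scene[0:2, 2:4] = 255

# Toy rule in place of a real model: a patch is positive if it is mostly bright.
demo_grid = classify_grid(scene, 2, lambda patch: patch.mean() > 128)
print(demo_grid)
```

In this toy case the scene is divided into a 2x3 grid and only the cell covering the bright block is marked positive. A real workflow would replace the brightness rule with the trained model's prediction for each patch.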

We used a NAIP image of Denver International Airport (DIA) to test the generated model. A sample of the image is shown below:

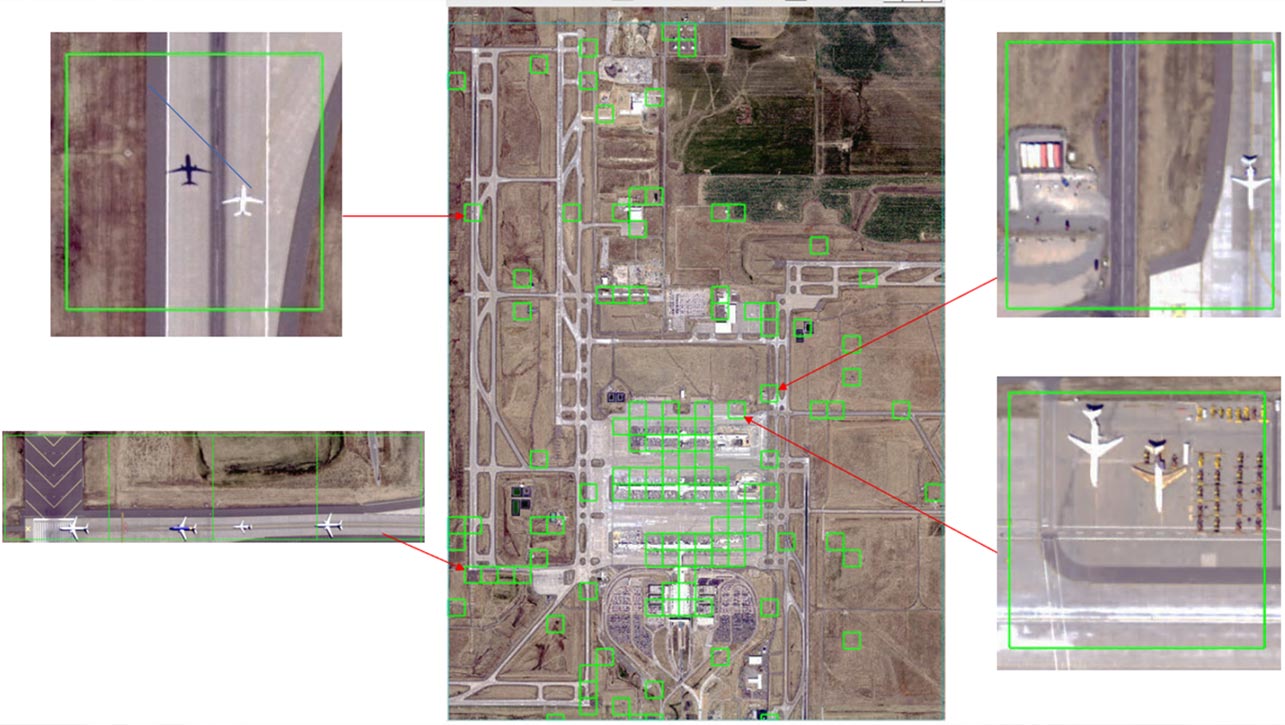

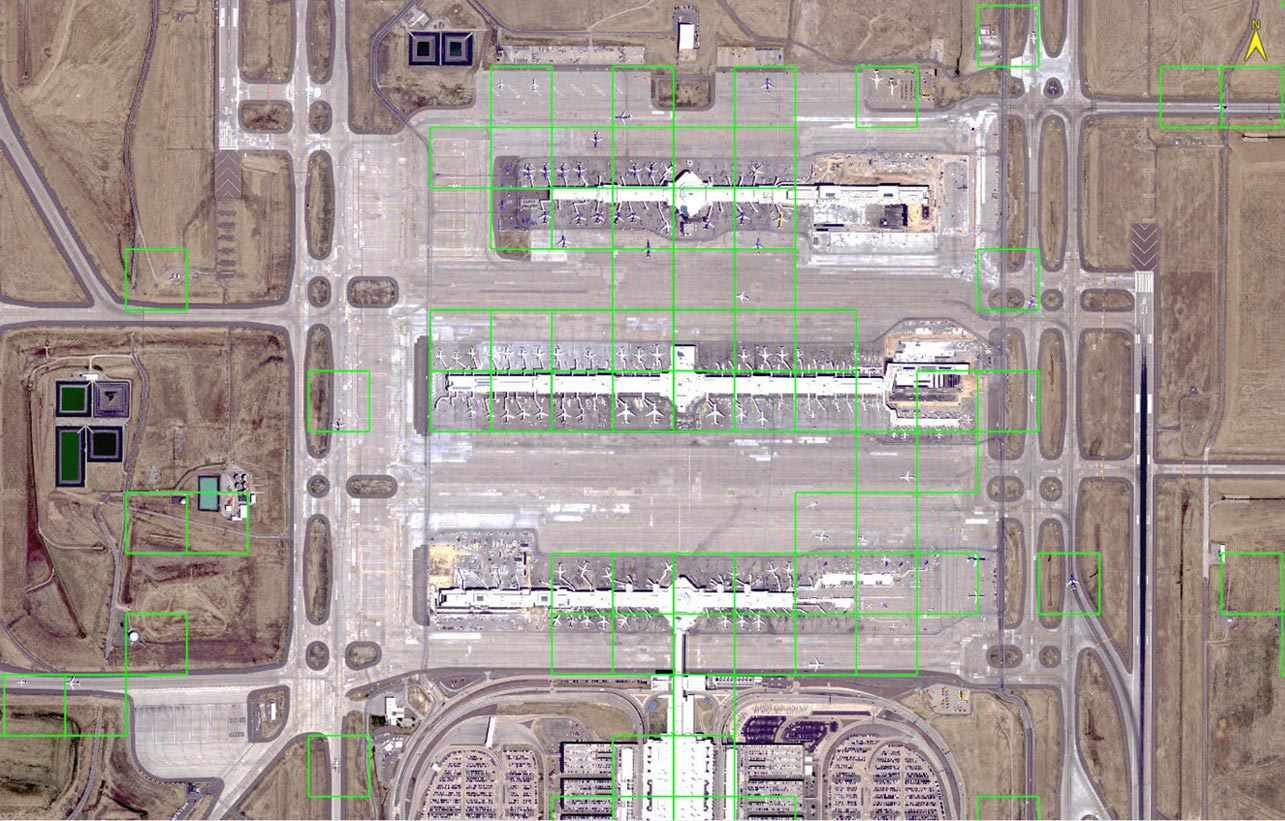

We ran the grid classification on the DIA scene and the output grid is shown below:

We took a closer look at one section of the result, an area of 13 x 21 patches (273 patches in total).

In the image below, true positives are highlighted in green, true negatives are highlighted in blue, false positives are highlighted in orange, and false negatives are highlighted in red.

In this section there are 59 true positives, 9 false positives, 1 false negative, and 204 true negatives. That is 263 correct patches out of 273, an accuracy of about 96.3%. This is a very high level of accuracy for a model trained on synthetic data and applied to a real image.
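The accuracy figure can be checked directly from the four counts. The short Python sketch below does that. It also computes precision and recall, which are not reported in the original analysis and are included here only as standard companion metrics. The function name `confusion_metrics` is hypothetical.

```python
def confusion_metrics(tp, fp, fn, tn):
    """Return basic metrics for a binary classification confusion matrix."""
    total = tp + fp + fn + tn
    return {
        "total": total,
        "accuracy": (tp + tn) / total,   # share of patches labeled correctly
        "precision": tp / (tp + fp),     # share of flagged patches that hold a plane
        "recall": tp / (tp + fn),        # share of plane patches that were flagged
    }


# Counts taken from the 13 x 21 section examined above.
metrics = confusion_metrics(tp=59, fp=9, fn=1, tn=204)

print(f"patches:   {metrics['total']}")
print(f"accuracy:  {metrics['accuracy']:.1%}")
print(f"precision: {metrics['precision']:.1%}")
print(f"recall:    {metrics['recall']:.1%}")
```

With these counts the accuracy comes out at about 96.3%, precision at about 86.8%, and recall at about 98.3%. Because true negatives dominate this section (204 of 273 patches), precision and recall give a more direct view of how the model handles the plane patches themselves.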

Using synthetic data to build models has a great deal of value for detecting targets when there simply isn’t sufficient representative training data. Whether you're a researcher, student, or technology enthusiast, exploring the world of synthetic remote sensing data promises to be an exciting journey.

Click here to learn more about DIRSIG, and get more detailed information on ENVI Deep Learning here.