Customizing the Geospatial Services Framework with Node.js

Anonym

In my opinion, one of the greatest features of the Geospatial Services Framework (GSF) is that it is built on Node.js. There are many reasons why this is great, but I want to talk about one in particular: the amount of customization it allows.

Below I will show a simple but powerful example of this customization, but before I get there, I want to give a quick overview of both GSF and Node.js.

Node.js is a JavaScript runtime built on Chrome’s V8 JavaScript engine. It uses an event-driven, non-blocking I/O model that makes it lightweight and efficient. What matters about Node.js in the context of this blog post is that it is a powerful, scalable backend for web applications, written in the same language almost every website uses: JavaScript.
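To make "event-driven, non-blocking" a little more concrete, here is a minimal sketch. It is not GSF code: `handleRequest` is a made-up function, and `setTimeout` merely stands in for slow I/O such as a disk or network read.

```javascript
// Record the order in which things happen.
var events = [];

// Hypothetical handler: starts some "slow I/O" and returns immediately.
function handleRequest(name) {
  events.push(name + ': started');
  // setTimeout stands in for a slow disk or network operation.
  setTimeout(function () {
    events.push(name + ': finished');
  }, 10);
}

handleRequest('request A');
handleRequest('request B');

// Both requests have started and neither blocked the other while waiting,
// so the process is free to do other work in the meantime.
events.push('server still free for other work');
```

The "finished" entries are only appended later, when the timers fire, which is the same pattern the S3 callback in the handler below relies on.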

We have improved ENVI Service Engine by adding GSF – a lightweight, but powerful framework based on Node.js that can provide scalable geospatial intelligence for any size organization. I like to describe it as a “messenger” system that provides a way to communicate between the web and our various products or “engines” that provide analytics such as ENVI, IDL and more.

GSF by design has its Node.js code exposed. This allows the product to be customized extensively to fit whatever architecture it needs to reside in. It is a modular system, with modules that can be toggled on or off, or even duplicated and extended. This makes customization easy and safe.

One of these modules is called a Request Handler. This module serves up endpoints for GSF. These endpoints can be REST-based calls, a web page, or, even better, both.

While developing an ENVI web client demo that takes raster data hosted on Amazon S3 and passes it to GSF for analysis, I found that I didn’t have a way to simply list what data was available in my S3 storage. While exploring ways to solve this problem, I realized that I could simply use the power of Node.js to accomplish the task.

After importing the aws-sdk package, which is already installed with GSF, into my request handler, I wrote a simple function that uses the package to list any .dat files in my S3 storage and returns that information to my front-end web application to be ingested and displayed.

Here is the slightly modified request handler code with comments explaining each part.

// Import Express, used by Node.js to set up REST endpoints.
var express = require('express');

// Import the AWS SDK, used to interact with Amazon Web Services, including S3 storage.
var aws = require('aws-sdk');

// Extra tools that should be included in request handlers.
// Note: util._extend is deprecated in current Node.js; Object.assign does the same job.
var extend = require('util')._extend;
var defaultConfig = require('./config.json');

/**
 * Dynamic Request Handler
 */
function DynUiHandler() {
  // Declare s3Workspace too, so it does not leak as an implicit global.
  var s3Workspace, s3Bucket, s3;

  // Set up config options.
  var config = {};
  extend(config, defaultConfig);

  // Grab information for S3 from config.json.
  s3Workspace = config.S3Root;
  s3Bucket = config.S3Bucket;

  // Set up the S3 client.
  s3 = new aws.S3(config);

  /**
   * List files in the S3 bucket.
   * @param {object} req - The Express request object.
   * @param {object} res - The Express response object.
   */
  function listS3Data(req, res) {
    // Use the bucket name passed in the REST call as the bucket to query.
    var params = {
      Bucket: req.params.bucket
    };

    // Call the SDK's built-in listObjects function to return what is available in the specified bucket.
    s3.listObjects(params, function(err, data) {
      // If an error occurred, report it to the client and stop here,
      // so that no second response is sent.
      if (err) {
        // err.code from the AWS SDK is a string such as 'NoSuchBucket',
        // so use the numeric statusCode for the HTTP status.
        var code = err.statusCode || 500;
        var message = err.message || err;
        res.status(code).send({
          error: message
        });
        return;
      }

      // Otherwise, collect every object whose key ends in '.dat'
      // into an array that will be returned to the client.
      var files = [];
      data.Contents.forEach(function(file) {
        if (file.Key.endsWith('.dat')) {
          files.push(file);
        }
      });

      // Send the files array containing metadata of all .dat files found.
      res.send(files);
    });
  }

  // Initialize the request handler; this runs when GSF starts up.
  this.init = function(app) {
    // Host the html subdirectory as a web page.
    app.use('/dynui/', express.static(__dirname + '/html/'));

    // Set up a REST call that runs listS3Data with the accompanying bucket parameter.
    app.get('/s3data/:bucket', listS3Data);
  };
}

// Export the request handler.
module.exports = DynUiHandler;
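One caveat with the handler above: a single S3 listing call returns at most 1,000 keys, so a large bucket would be reported only partially. The following is a rough sketch, not part of my original handler, of how the listing could be paged with the SDK's `listObjectsV2` call and its `ContinuationToken`, `IsTruncated` and `NextContinuationToken` fields. The helper name `listAllDatFiles` is made up for illustration, and it assumes `s3` is an aws-sdk v2 S3 client or anything with a compatible `listObjectsV2(params, callback)` method.

```javascript
// Hypothetical helper: collect every '.dat' object across all pages of a listing.
// `s3` is assumed to be an aws-sdk v2 S3 client (or a compatible stand-in).
function listAllDatFiles(s3, bucket, done) {
  var files = [];

  function fetchPage(token) {
    var params = { Bucket: bucket };
    if (token) {
      // Ask S3 to continue where the previous page stopped.
      params.ContinuationToken = token;
    }

    s3.listObjectsV2(params, function (err, data) {
      if (err) {
        return done(err);
      }

      // Keep only the objects whose key ends in '.dat'.
      (data.Contents || []).forEach(function (file) {
        if (file.Key.endsWith('.dat')) {
          files.push(file);
        }
      });

      if (data.IsTruncated) {
        // More keys remain, so request the next page.
        fetchPage(data.NextContinuationToken);
      } else {
        done(null, files);
      }
    });
  }

  fetchPage(null);
}
```

Inside `listS3Data`, this could replace the direct `s3.listObjects` call, with the `done` callback sending either the error or the collected files back to the client.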

After restarting the server, I was able to hit my REST endpoint by pasting the following into my browser:

http://localhost:9191/s3data/mybucket

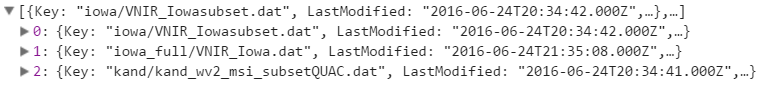

This returned JSON describing the .dat files in “mybucket”:

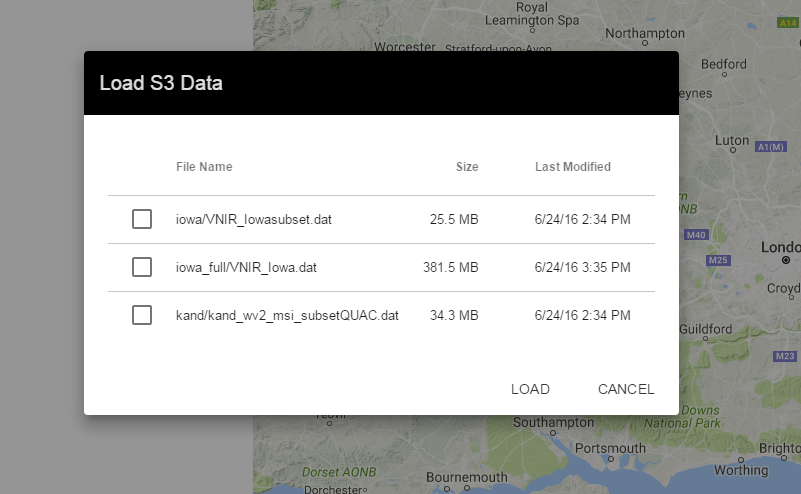

Using this information, I was able to give users of my application real-time information, right inside the interface, about which data they have available.
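For illustration, here is a minimal sketch of how a front end might consume that endpoint. It is not the code from my demo: the function names `toDisplayRows` and `loadBucketListing` are hypothetical, and it assumes the page is served from the same host as GSF and that each returned entry carries the `Key` and `Size` fields that S3 includes in its listing.

```javascript
// Hypothetical helper: turn the S3 metadata returned by /s3data/:bucket
// into simple rows for display.
function toDisplayRows(files) {
  return files.map(function (file) {
    return {
      // Show only the file name, not the full key path.
      name: file.Key.split('/').pop(),
      key: file.Key,
      // Size is reported in bytes; convert to megabytes for display.
      sizeMB: (file.Size / (1024 * 1024)).toFixed(1)
    };
  });
}

// Hypothetical helper: fetch the listing for a bucket.
// `fetchImpl` is optional and defaults to the browser's global fetch.
function loadBucketListing(bucket, fetchImpl) {
  var doFetch = fetchImpl || fetch;
  return doFetch('/s3data/' + encodeURIComponent(bucket))
    .then(function (response) {
      if (!response.ok) {
        throw new Error('Listing failed with status ' + response.status);
      }
      return response.json();
    })
    .then(toDisplayRows);
}
```

A page could then call `loadBucketListing('mybucket')` and render the resulting rows into a list or drop-down.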

This is a simple example of how I customized a stock request handler to make my web demo more powerful. More generally, the ability to modify the source code of such a powerful system in a modular fashion provides a safe way to mold GSF into the exact form you need to fit your own architecture.

It might just be me, but I much prefer that to restructuring my own existing architecture to fit closed-source code I know nothing about.