Using Deep Learning for Feature Extraction

Anonym

In August, I talked about how to pull features out of images using known spatial properties about an object. Specifically, in that post, I used rule-based feature extraction to pull stoplights out of an image.

Today, I’d like to look in to a new way of doing feature extraction using deep learning technology. With our deep learning tools developed here in house, we can use examples of target data in order to find other similar objects in other images.

In order to train the system, we will need 3 different kinds of examples for the deep learning network to learn what to look for. This will be target, non-target, and confusers. These examples are patches cut out of similar images, and the patches will all be the same size. In my case for this exercise, I've picked a size of 50 by 50 pixels.

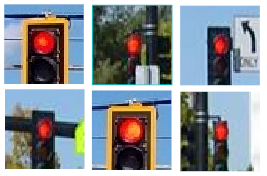

The first patch type is actual target data – I’ll be looking for illuminated traffic lights. For the model to work well, we’ll need different kinds of traffic signals, lightning conditions, and camera angles. This will help the network generalize what the object looks like.

Next, we’ll need negative data, or data that does not contain the object. This will be the areas surrounding the target, and other features that will possibly appear in the background of the image. In our case for traffic lights, this will include cars, streetlights, road signs, foliage, and others.

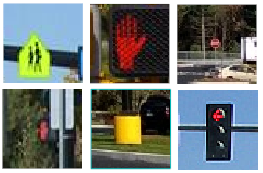

For the final patch type, I went through some images and marked things that may confuse the system. These are called confusers, and will be objects with a similar size, color, and/or shape of the target. In our case, this could be other signals like red arrows or a “don’t walk” hand. I’ve also included some bright road signs and a distant stop sign.

Once we have all of these patches, we can use our machine learning tool known as MEGA to train a neural network that can be used to identify similar objects in other images.

Do note that I have many more patches created than just the ones displayed. With more examples, and more diverse examples, MEGA has a better chance of accurately classifying target vs non-target in an image.

In our case here, we’ll only have three possible outcomes as we look though the image. This will be lights, not lights, and looks-like-a-light classes. If you have many different objects in your scene, you can even get something more like a classification image, as MEGA can be used to identify as many objects in an image as you like. If we wanted to extend this idea here, we could look for red lights, green lights, street lights, lane markers, or other cars. (This is a simple example of how deep learning would be used in autonomous cars!)

To learn more about MEGA and what it can do in your analysis stack, contact our Custom Solutions Group for more details! For my next post, we’ll look at the output from the trained neural network, and analyze the results.