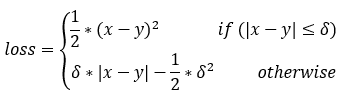

In machine learning, a loss function is a mathematical function that measures the error between a model's output and the expected output; training minimizes this function to reach convergence. Choosing an appropriate loss function is an important step in designing your neural network. The IDLmllfHuber (Huber) loss function is implemented with the following formula:

where x is the calculated output of the model and y is the expected output, or truth.
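For reference, the textbook Huber loss can be written in the same notation as below. This is the standard definition, with δ standing for the Delta argument. It is an assumption that the class follows this form exactly; any scaling or averaging over elements is not stated here.

```latex
L_\delta(x, y) =
\begin{cases}
\tfrac{1}{2}\,(x - y)^2 & \text{if } |x - y| \le \delta \\
\delta \left( |x - y| - \tfrac{1}{2}\,\delta \right) & \text{otherwise}
\end{cases}
```

The two branches meet at |x - y| = δ, so the loss is continuous and grows only linearly for large errors, which makes it less sensitive to outliers than a purely squared error.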

Example

Compile_opt idl2

LossFunction = IDLmllfHuber(0.5)

Print, LossFunction(Findgen(10)/9.0, Fltarr(10))

Typically, you will pass an object of this class to a neural network model definition:

Classifier = IDLmlFeedForwardNeuralNetwork([3, 7, 1], $
  LOSS_FUNCTION=IDLmllfHuber(0.25))

Syntax

Result = IDLmllfHuber(Delta)

Arguments

Delta

Specify the threshold on the absolute error. Errors with a magnitude at or below Delta are penalized quadratically; larger errors are penalized linearly.
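To illustrate how Delta separates the two regimes, here is a minimal sketch of the standard per-element Huber computation in plain IDL. The function name huber_sketch is hypothetical and is not part of the IDL Machine Learning API. It assumes the textbook definition, so its values may differ from those of IDLmllfHuber in scaling or in how elements are reduced.

```idl
; Hypothetical helper for illustration only; not part of the IDL ML API.
; Assumes the textbook Huber definition, evaluated element by element.
function huber_sketch, x, y, delta
  compile_opt idl2

  ; Absolute error between model output x and truth y
  err = abs(x - y)

  ; Quadratic penalty, used where the error is at or below delta
  quad = 0.5 * err^2

  ; Linear penalty, used where the error exceeds delta
  lin = delta * (err - 0.5 * delta)

  ; The comparison masks are 1 where true and 0 elsewhere,
  ; so exactly one branch contributes for each element
  return, (err le delta) * quad + (err gt delta) * lin
end
```

Assuming the function is compiled, it can be called with the same inputs as the example on this page, such as `Print, huber_sketch(Findgen(10)/9.0, Fltarr(10), 0.5)`. A smaller Delta moves more elements onto the linear branch.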

Keywords

None

Version History

See Also

IDLmllfCrossEntropy, IDLmllfLogCosh, IDLmllfMeanAbsoluteError, IDLmllfMeanSquaredError