Pixel segmentation is the process of highlighting pixels in an image that represent features you are interested in, then assigning those pixels a class label. The following figure shows an example of pixel segmentation using regions of interest (ROIs) to label different types of aircraft:

Pixel segmentation goes beyond simply locating objects. It also provides information about the shape, area, and other object attributes.

Process Overview

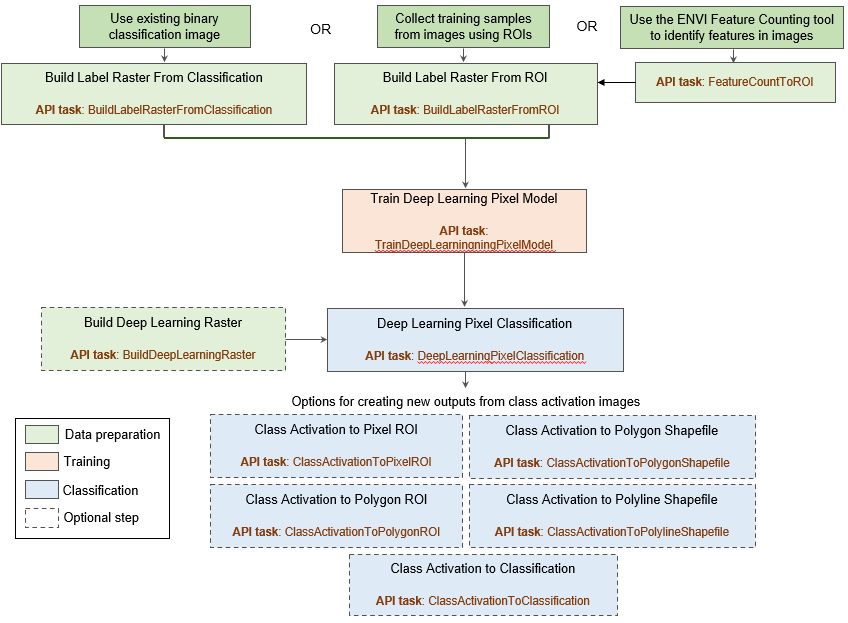

The pixel segmentation process in ENVI involves several steps. First, you train a deep learning model to look for specific features using a set of input label rasters that indicate known samples of those features. See the Label Features topic for more information about creating label rasters.
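To make the idea of "known samples" concrete, the sketch below burns ROI pixels into a single-band class map: 0 for unlabeled background and 1 to N for each feature class. This is a simplified stand-in, not ENVI's label raster format (which is described in the Label Features topic). The function name `rasterize_rois` and the representation of an ROI as a list of (row, column) pixels are hypothetical choices for illustration.

```python
from typing import Dict, List, Tuple

Pixel = Tuple[int, int]  # (row, col); hypothetical ROI representation


def rasterize_rois(rows: int, cols: int,
                   rois: Dict[int, List[Pixel]]) -> List[List[int]]:
    """Burn ROI pixels into a simplified label map.

    0 = background (no label); 1..N = class values taken from the dict keys.
    Hypothetical helper, not part of the ENVI API.
    """
    label = [[0] * cols for _ in range(rows)]
    # Process classes in ascending order, so a pixel that falls in
    # two ROIs ends up with the higher class value.
    for class_value in sorted(rois):
        if class_value < 1:
            raise ValueError("class values start at 1; 0 is reserved for background")
        for r, c in rois[class_value]:
            label[r][c] = class_value
    return label


label = rasterize_rois(3, 4, {1: [(0, 0), (0, 1)], 2: [(2, 3)]})
for row in label:
    print(row)
```

Here two pixels are labeled as class 1 and one pixel as class 2; every other pixel remains background.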

After a deep learning model learns what a specific feature looks like from the label rasters, it can look for similar features in other images by classifying them using the trained model. For example, you may want to find the same features in a much larger version of the image than the model was trained on, or even different images with similar spatial and spectral properties. The end result is a classification raster of the various features. If you are only interested in one particular feature, the result is a binary classification raster with values of 0 (background) and 1 (feature). If you are extracting multiple features, the pixel values of the classification raster range from 0 (background) to the number of classes.

A secondary output of the classification step is a class activation raster. This is a grayscale image with one band per feature, where each pixel value indicates the probability that the pixel belongs to that feature.
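The relationship between the class activation raster and the classification raster can be sketched as follows: each pixel is assigned the class whose band has the highest probability, provided that probability reaches a threshold, and is otherwise labeled background (0). This is an illustrative assumption, not ENVI's documented decision rule. The `classify` function and the 0.5 default threshold are hypothetical.

```python
from typing import List

Band = List[List[float]]  # one probability band: rows x cols


def classify(activation: List[Band], threshold: float = 0.5) -> List[List[int]]:
    """Turn a class activation raster (one probability band per class)
    into a classification raster: 0 = background, k = class k (1-based).

    Hypothetical rule: highest-probability class wins if it reaches `threshold`.
    """
    rows, cols = len(activation[0]), len(activation[0][0])
    result = [[0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            probs = [band[r][c] for band in activation]
            # Index of the band with the highest probability (ties -> lowest index).
            best = max(range(len(probs)), key=probs.__getitem__)
            if probs[best] >= threshold:
                result[r][c] = best + 1  # band 0 -> class value 1
    return result


# One feature: the result is a binary raster of 0 and 1.
single = classify([[[0.9, 0.2], [0.6, 0.1]]])

# Two features: values range from 0 (background) to 2 (number of classes).
multi = classify([
    [[0.9, 0.1], [0.3, 0.2]],   # band for class 1
    [[0.05, 0.8], [0.4, 0.3]],  # band for class 2
])
```

With one band, `single` contains only 0 and 1, matching the binary case described above; with two bands, `multi` uses values 0 through 2.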

The initial classification raster and class activation raster may not be entirely accurate, depending on the quality of the input training samples. An optional step is to refine the label rasters by creating ROIs from the pixels with the highest values in the class activation raster, then editing those ROIs to remove false positives. You can combine the refined ROIs with the original ROIs and use them either to train a new model or to refine the trained model.
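The refinement loop can be sketched with pixel sets: select high-activation pixels from one band, subtract the pixels an analyst marks as false positives, and take the union with the original ROI. The cutoff value, the `false_positives` set, and the function names are hypothetical; in practice the editing is done interactively on ROIs rather than in code.

```python
from typing import List, Set, Tuple

Pixel = Tuple[int, int]  # (row, col)


def high_activation_pixels(band: List[List[float]], cutoff: float) -> Set[Pixel]:
    """Return the (row, col) of every pixel whose activation is >= cutoff."""
    return {(r, c)
            for r, row in enumerate(band)
            for c, value in enumerate(row)
            if value >= cutoff}


def refine_roi(original: Set[Pixel], band: List[List[float]],
               cutoff: float, false_positives: Set[Pixel]) -> Set[Pixel]:
    """Hypothetical refinement: new high-activation pixels, minus rejected
    false positives, combined with the original ROI."""
    candidates = high_activation_pixels(band, cutoff)
    edited = candidates - false_positives  # stands in for manual ROI editing
    return original | edited               # combine with the original ROI


band = [[0.95, 0.20, 0.91],
        [0.10, 0.88, 0.30]]
refined = refine_roi(original={(0, 0), (1, 0)}, band=band,
                     cutoff=0.85, false_positives={(0, 2)})
print(sorted(refined))
```

Pixel (0, 2) exceeds the cutoff but is rejected as a false positive, so the refined ROI keeps the two original pixels plus the new pixel (1, 1).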

The following flowchart shows the steps of the pixel segmentation process:

See the following topics for more information on each step:

Reference: Deskevich, Michael P., Robert A. Simon, and Christopher R. Lees. Image processing system including training a model based upon iterative blurring of geospatial images and related methods. U.S. Patent No. 10,984,507, issued April 20, 2021. Assignee: Harris Geospatial Solutions, Inc.; now owned by NV5 Global, Inc.

See Also

Overview of ENVI Deep Learning, Label Features, Pixel Segmentation and Feature Labeling Tips