Deep Learning Optimized Object Classification

The Deep Learning Optimized Object Classification tool uses a trained ENVI Deep Learning object detection model to perform inference only in the regions of a raster that a trained ENVI Deep Learning grid model identifies as containing features of interest (patent pending). Run this tool as many times as needed to classify multiple rasters. The tool produces two shapefiles: an object shapefile with bounding boxes for each class, and a grid shapefile of the grid cells in which objects were detected.

You can also write a script to classify a raster using the DeepLearningOptimizedObjectClassification task.
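The two-stage idea described above can be sketched in plain Python. This is a conceptual illustration only, not ENVI's implementation and not the DeepLearningOptimizedObjectClassification task API. It assumes a single-band raster stored as a list of rows, uses hypothetical callables (`grid_model`, `object_model`) in place of the two trained ONNX models, and assumes that the grid confidence threshold removes low-scoring grid cells before object detection runs.

```python
from typing import Callable, List, Sequence, Tuple

# Bounding box in pixel coordinates: (x_min, y_min, x_max, y_max, class_id, score).
Box = Tuple[float, float, float, float, int, float]


def optimized_detect(
    raster: Sequence[Sequence[float]],  # single-band raster as rows of pixel values (simplification)
    cell_size: int,
    grid_model: Callable[[List[List[float]]], float],        # hypothetical: confidence that a cell has features
    object_model: Callable[[List[List[float]]], List[Box]],  # hypothetical: boxes in cell coordinates
    grid_threshold: float = 0.2,
) -> Tuple[List[Tuple[int, int, float]], List[Box]]:
    """Run the object model only on grid cells the grid model flags (conceptual sketch)."""
    rows, cols = len(raster), len(raster[0])
    kept_cells: List[Tuple[int, int, float]] = []  # (left, top, grid score) of each kept cell
    boxes: List[Box] = []
    for top in range(0, rows, cell_size):
        for left in range(0, cols, cell_size):
            # Cut out one grid cell; cells on the right/bottom edges may be smaller.
            cell = [list(row[left:left + cell_size]) for row in raster[top:top + cell_size]]
            score = grid_model(cell)
            if score < grid_threshold:
                continue  # assumed behavior: skip cells unlikely to contain features of interest
            kept_cells.append((left, top, score))
            # Detect objects inside the cell, then shift the boxes back to raster coordinates.
            for x0, y0, x1, y1, cls, conf in object_model(cell):
                boxes.append((x0 + left, y0 + top, x1 + left, y1 + top, cls, conf))
    return kept_cells, boxes


def toy_grid_model(cell: List[List[float]]) -> float:
    # Hypothetical stand-in: "features present" if any pixel in the cell is bright.
    return 1.0 if max(max(row) for row in cell) > 5 else 0.0


def toy_object_model(cell: List[List[float]]) -> List[Box]:
    # Hypothetical stand-in: always reports one 1x1 object of class 1 at the cell origin.
    return [(0, 0, 1, 1, 1, 0.9)]


# Usage: a 4x4 raster with one bright pixel, split into 2x2 grid cells.
raster = [[0, 0, 0, 0],
          [0, 0, 0, 0],
          [0, 0, 0, 9],
          [0, 0, 0, 0]]
cells, boxes = optimized_detect(raster, 2, toy_grid_model, toy_object_model)
# cells == [(2, 2, 1.0)] and boxes == [(2, 2, 3, 3, 1, 0.9)]
```

Only one of the four cells passes the grid stage, so the (expensive) object model runs once instead of four times. That saving is the motivation for pairing a grid model with an object model.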

Follow these steps:

- In the ENVI Toolbox, select Deep Learning > Grid > Deep Learning Optimized Object Classification. The Deep Learning Optimized Object Classification dialog appears with the Main tab selected.

- In the Input Raster field, select a raster to classify. It must contain at least as many bands as the raster that was used to train the model.

- In the Input Object Model field, select an ONNX file (.envi.onnx) containing the object detection model to apply within the grid cells detected by the grid model.

- In the Input Grid Model field, select an ONNX file (.envi.onnx) that was trained for grid-based analysis. The tool uses this model to identify the grid cells in the input raster that contain features of interest.

- For the Confidence Threshold, use the slider bar or up/down arrow buttons to specify a threshold value between 0 and 1.0. Bounding boxes with a confidence score less than this value will be discarded. The default value is 0.2. Decreasing this value generally results in more classification bounding boxes throughout the scene. Increasing it results in fewer classification bounding boxes.

- For the Grid Confidence Threshold, use the slider bar or up/down arrow buttons to specify a threshold value between 0 and 1.0. This threshold applies to the confidence scores of the grid model: grid cells with a confidence score less than this value are discarded before the Intersection Over Union Threshold value is applied. The default value is 0.2. Decreasing this value generally results in more classification bounding boxes throughout the scene. Increasing it results in fewer classification bounding boxes.

- For the Intersection Over Union Threshold, use the slider bar or up/down arrow buttons to specify a value from 0 to 1.0 indicating the Non-Maximum Suppression Intersection over Union (NMS IOU) value. This is a Deep Learning object detection parameter that reduces detection clustering by pruning bounding boxes that have high IOU with previously selected boxes. The default value is 0.5. Increasing this value results in more overlapping bounding boxes around features. Decreasing the value results in fewer overlapping bounding boxes.

- For Enhance Display, select Yes if you want to apply an additional small stretch to the processed data to suppress noise and enhance feature visibility. The optional stretch is effective for improving visual clarity in imagery acquired from aerial platforms or sensors with higher noise profiles.

- For Visual RGB, select Yes if you want to encode the output raster as a three-band RGB composite (red, green, blue) for color image processing. This ensures consistent band selection from ENVI display types (such as RGB, CIR, and pan) and supports integration of diverse data sources (such as MSI, panchromatic, and VNIR) without band mismatch.

- In the Output Object Vector field, select a path and filename for the output shapefile (.shp). The shapefile contains bounding boxes for each class.

- Enable the Display result check box to display the output in the view when processing is complete.

- In the Output Grid Vector field, select a path and filename for the output shapefile (.shp). The shapefile contains the grid cells in which objects were detected.

- To set advanced parameters for processing, select the Advanced tab.

- From the Processing Runtime field, select one of the following execution environments for the classification task:

  - CUDA: (Default) Uses NVIDIA GPU acceleration for optimal performance and faster processing.

  - CPU: Ensures compatibility on systems without GPU support, but with reduced processing speeds.

- Use the CUDA Device ID parameter to explicitly control GPU selection in multi-GPU environments when you choose CUDA as the Processing Runtime. Optionally enter a target GPU device ID. If you provide a valid ID, the classification task will execute on the specified CUDA-enabled GPU. If the ID is omitted or invalid, the system defaults to GPU device 0.


- To reuse these task settings in future ENVI sessions, save them to a file. Click the down arrow next to the OK button and select Save Parameter Values, then specify the location and filename to save to. Note that some parameter types, such as rasters, vectors, and ROIs, will not be saved with the file. To apply the saved task settings, click the down arrow and select Restore Parameter Values, then select the file where you previously stored your settings.

- To run the process in the background, click the down arrow and select Run Task in the Background. If an ENVI Server has been set up on the network, the Run Task on remote ENVI Server name option is also available. The ENVI Server Job Console shows the progress of the job and provides a link to display the result when processing is complete. See the ENVI Servers topic in ENVI Help for more information.

- Click OK.
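The Confidence Threshold and Intersection Over Union Threshold parameters follow the common object detection post-processing pattern: discard low-scoring boxes, then apply greedy non-maximum suppression (NMS). The following is a generic, single-class sketch of that pattern, not ENVI's internal code; the box tuple layout and the function names `iou` and `filter_detections` are assumptions for illustration. A multi-class version would typically run the same filtering separately for each class.

```python
from typing import List, Tuple

# Assumed box layout: (x_min, y_min, x_max, y_max, score).
Box = Tuple[float, float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))  # overlap width
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))  # overlap height
    inter = ix * iy
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def filter_detections(boxes: List[Box], conf_threshold: float = 0.2,
                      iou_threshold: float = 0.5) -> List[Box]:
    """Confidence filtering followed by greedy NMS (generic sketch)."""
    # 1. Discard boxes whose score is less than the confidence threshold,
    #    then visit the survivors from highest to lowest score.
    candidates = sorted((b for b in boxes if b[4] >= conf_threshold),
                        key=lambda b: b[4], reverse=True)
    kept: List[Box] = []
    # 2. Keep a box only if it does not overlap any already-kept box
    #    by more than iou_threshold.
    for box in candidates:
        if all(iou(box, k) <= iou_threshold for k in kept):
            kept.append(box)
    return kept


sample = [(0, 0, 10, 10, 0.9), (1, 1, 11, 11, 0.8),
          (20, 20, 30, 30, 0.15), (20, 20, 30, 30, 0.6)]
print(filter_detections(sample))                     # [(0, 0, 10, 10, 0.9), (20, 20, 30, 30, 0.6)]
print(len(filter_detections(sample, iou_threshold=0.7)))  # 3
```

The first two sample boxes overlap with an IoU of about 0.68, so the default 0.5 threshold keeps only the higher-scoring one, while 0.7 keeps both. This matches the documented behavior that increasing the IoU threshold leaves more overlapping bounding boxes. The 0.15 box is removed by the 0.2 confidence threshold before NMS runs.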

Evaluate the Results

Display the output grid and object detection shapefiles over the raster that was classified. You should see multiple large green boxes (grid cells) surrounding smaller colored boxes (objects). The example image below is based on the data used in the object detection tutorial; the same training data was used in Train Deep Learning Grid Model to generate the grid model. The image below (ImageToClassify.dat) and the resulting vectors were created using the grid model and the object detection tutorial model (ObjectDetectionModel_HandicapSpots.envi.onnx).

Follow these steps to overlay the output classification vectors over the Input Raster:

- Display the Input Raster in the current view.

- In the Layer Manager, click and drag the Input Raster below the vector classification outputs.

You can also view the confidence value of each area of interest (green box) identified by the grid model, as well as the confidence values of the objects detected by the object detection model. These values can help you determine whether your confidence thresholds were set too high or too low, and help you assess the accuracy of your models.
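To judge whether a threshold was set too high or too low, it can help to count how many detections each candidate threshold would keep. This sketch assumes you have already copied the Confidence attribute values from an output shapefile into a Python list; the values shown are made up for illustration, and reading the shapefile itself is not shown. The helper name `detections_per_threshold` is hypothetical.

```python
from typing import Dict, Iterable, Sequence


def detections_per_threshold(confidences: Iterable[float],
                             thresholds: Sequence[float]) -> Dict[float, int]:
    """Count how many detections would survive each candidate threshold.

    A detection survives when its confidence is not less than the threshold,
    mirroring the rule that boxes scoring below the threshold are discarded.
    """
    values = list(confidences)
    return {t: sum(1 for c in values if c >= t) for t in thresholds}


# Hypothetical Confidence values copied from an output vector's attribute table.
confidences = [0.986, 0.91, 0.74, 0.55, 0.31, 0.22]
print(detections_per_threshold(confidences, [0.2, 0.5, 0.9]))
# {0.2: 6, 0.5: 4, 0.9: 2}
```

A large drop in the count between two neighboring thresholds shows that many detections score in that range. Small changes to the threshold in that range have the greatest effect on the output.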

- In the Layer Manager, right-click the grid classification vector and select Filter Records by Attribute.

-

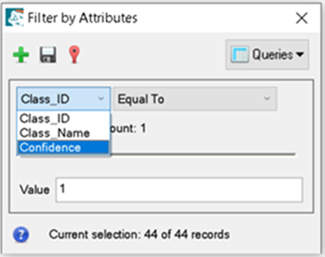

In the Filter by Attributes dialog, select the Class_ID drop-down list, then select Confidence.

-

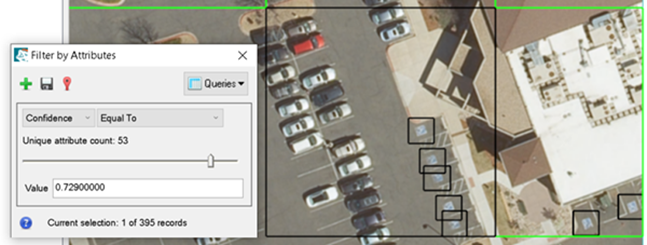

Setting the confidence Value to 0.729 with the grid vector selected changes the green box to black. This provides feedback that this grid cell contains features of interest with a 0.729 confidence value.

-

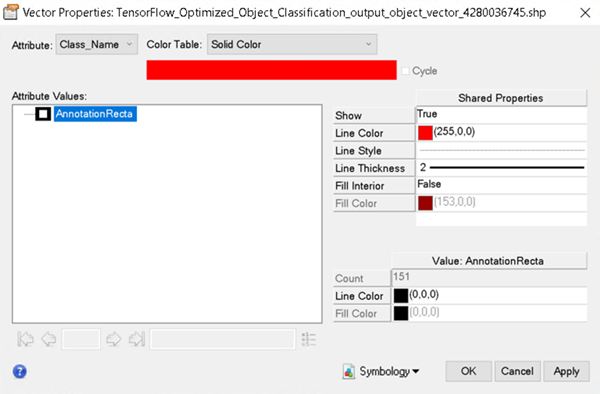

To see the object's confidence per grid cell, you need to change the line color of the objects detected (for example, from black to blue). In the Layer Manager, select the object vector, right click, and select properties from the menu to open the Vector Properties dialog.

-

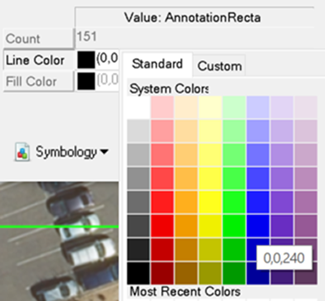

In the bottom right of the dialog, select the black box next to Line Color and select Blue. Click Apply, then OK. All object boxes should now be blue.

-

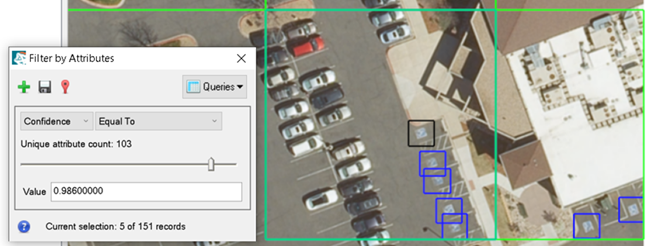

In the Layer Manager right click the object classification vector and select Filter Records by Attribute. Click Confidence and move the slider to a value of 0.986 with the object vector selected. This changes the top-most handicap spot from blue to black in the following example:

See Also

Train Deep Learning Object Models, Train Deep Learning Grid Models, Deep Learning Grid Classification