Older graphics cards and monitors can display a maximum color depth of 8 bits per channel. In such cases, high-precision images that exceed 8 bits per channel must be converted to 8-bit data for display. Previously, this was accomplished using the IDL BYTSCL function, which was limited by the processing capabilities of the CPU. This conversion can now be performed by the GPU: the full-precision image is passed to video card memory once and is then converted as it is rendered.

For more information and example code, see Examples of Handling High Precision Images.

OpenGL Conversion of Image Data to Texture Data

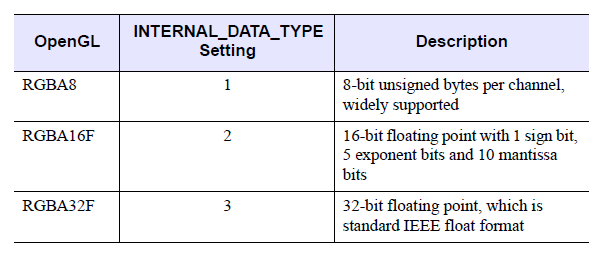

It is important to understand how OpenGL converts a high precision image to a texture map before writing a shader program. The graphics card vendor ultimately decides what formats are supported. Using the IDLgrImage INTERNAL_DATA_TYPE property, you tell OpenGL in what format you would like the texture stored. The following table describes the relationship between OpenGL types and the INTERNAL_DATA_TYPE property value.

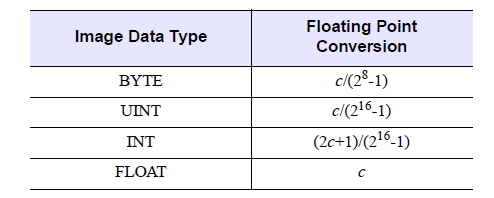

An IDLgrImage accepts data of type BYTE, UINT, INT and FLOAT. When you create the texture map, the data from IDLgrImage is converted to the type specified in INTERNAL_DATA_TYPE.

Note: If your image data is floating point, either your fragment shader must scale it to the range 0.0 to 1.0 before writing it to gl_FragColor, or you must scale it to the range 0.0 to 1.0 before setting it on the IDLgrImage.
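
The second option in the note, scaling on the CPU before the data reaches the image object, amounts to a min-max normalization. The following is a minimal sketch of that arithmetic in Python rather than IDL; the function name `normalize` and the sample values are hypothetical, and mapping a constant image to 0.0 is an assumption made only to avoid dividing by zero.

```python
def normalize(values):
    """Linearly rescale a sequence of floats so min -> 0.0 and max -> 1.0."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # Assumption: a constant image has no range to stretch, so map it to 0.0.
        return [0.0 for _ in values]
    span = hi - lo
    return [(v - lo) / span for v in values]


# Hypothetical floating-point pixel values outside the 0.0 to 1.0 range.
scaled = normalize([-12.5, 0.0, 37.5, 87.5])
```

The same expression, `(v - lo) / span`, could instead be evaluated per texel in the fragment shader if the minimum and maximum are supplied to it.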

If INTERNAL_DATA_TYPE is set to floating point (INTERNAL_DATA_TYPE equals 2 or 3), image data conversion is performed by OpenGL as follows, where c is the color component being converted:
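
As a rough illustration, the sketch below assumes the component conversion rules given in the OpenGL 2.x specification: an unsigned n-bit integer maps to c/(2^n - 1), a signed n-bit integer maps to (2c + 1)/(2^n - 1), and floating-point data passes through unchanged. The function name `to_normalized_float` is hypothetical, Python is used only to show the arithmetic, and an individual driver may behave differently.

```python
def to_normalized_float(c, bits, signed=False):
    """Convert an integer color component to floating point.

    Assumes the OpenGL 2.x component conversion rules:
      unsigned: c / (2**bits - 1)            -> range 0.0 to 1.0
      signed:   (2 * c + 1) / (2**bits - 1)  -> range -1.0 to 1.0
    """
    denom = (1 << bits) - 1
    if signed:
        return (2 * c + 1) / denom
    return c / denom


byte_max = to_normalized_float(255, 8)                   # BYTE, largest value
uint_max = to_normalized_float(65535, 16)                # UINT, largest value
int_min = to_normalized_float(-32768, 16, signed=True)   # INT, smallest value
int_max = to_normalized_float(32767, 16, signed=True)    # INT, largest value
```

Under these rules the extremes of each integer type land exactly on the ends of the normalized range, which is why integer data needs no further scaling in the shader while FLOAT data does.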

If INTERNAL_DATA_TYPE is 1 (8-bit unsigned byte), then the image data is scaled to unsigned byte. This is equivalent to a linear BYTSCL from the entire type range (e.g. 0 - 65535) to unsigned byte (0 - 255).
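
The linear scaling described above can be sketched as follows for a 16-bit UINT image. This is an illustration in Python, not IDL; the function name `scale_to_byte` is hypothetical, and round-to-nearest is an assumption, so the exact rounding used by BYTSCL or by a particular OpenGL driver may differ by one level.

```python
def scale_to_byte(c, type_max=65535):
    """Linearly map an unsigned value in 0..type_max to a byte in 0..255."""
    normalized = c / type_max             # 0.0 to 1.0 across the full type range
    return int(normalized * 255 + 0.5)    # assumption: round to nearest level


darkest = scale_to_byte(0)
midpoint = scale_to_byte(32768)
brightest = scale_to_byte(65535)
```

Because the mapping covers the entire type range rather than the range of values actually present, an image that occupies only a small part of 0 - 65535 will occupy a correspondingly small part of 0 - 255.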

Note: INTERNAL_DATA_TYPE of 0, the default, maintains backwards compatibility by converting the image data to byte without scaling.

To avoid data loss during conversion, choose an internal data type with sufficient precision to hold your image data. For example, with a 16-bit UINT image that uses the full range of 0 - 65535, if you set INTERNAL_DATA_TYPE to 2 (16-bit floating point), your image will still be converted to the range of 0.0 to 1.0, but some precision will be lost (due to the mantissa of a 16-bit float being only 10 bits). If you need a higher level of precision, set INTERNAL_DATA_TYPE to 3 (32-bit floating point). However, on some cards there may be a performance penalty associated with the higher level of precision, and requesting 32-bit floating point will certainly require more memory.
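
The precision loss can be estimated by counting how many of the 65536 possible UINT levels remain distinguishable after normalization and storage at each precision. The sketch below uses Python's standard `struct` module, whose `e` and `f` format characters pack IEEE 754 half and single precision values; the helper names are hypothetical, and the result assumes the card stores textures in the same IEEE formats.

```python
import struct


def round_trip(value, fmt):
    """Store a Python float in the given binary format and read it back."""
    return struct.unpack(fmt, struct.pack(fmt, value))[0]


def distinct_levels(fmt, max_value=65535):
    """Count distinct stored values after normalizing 0..max_value to 0.0..1.0."""
    return len({round_trip(c / max_value, fmt) for c in range(max_value + 1)})


half_levels = distinct_levels("<e")     # 16-bit floating point
single_levels = distinct_levels("<f")   # 32-bit floating point
```

With 32-bit floating point every input level stays distinct, whereas with 16-bit floating point many neighboring levels collapse to the same stored value, most noticeably near 1.0 where the spacing between representable values is widest.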

Once the image has been converted to a texture map, it can be sampled by the shader. The GLSL function texture2D returns the sampled texel in floating point (0.0 to 1.0). Therefore, if the INTERNAL_DATA_TYPE is 1 (unsigned byte), the texel is converted to floating point, using c/(2^8 - 1), before being returned.