The following examples provide guidelines for reading in various types of image data, including how to set the IDLgrImage INTERNAL_DATA_TYPE property and how to write the supporting fragment shader code. Because of the size limitations of the IDL distribution, high-precision images are not included, so you will need to use your own data to create working examples. See the following sections:

Displaying a 16-bit UINT Image

In this example, the input image (uiImageData) is a 16-bit unsigned integer greyscale image that uses the full range of 0 to 65535. The goal is to display the entire range using a linear byte scale. Traditionally, we would use the BYTSCL function in IDL before loading the data into the IDLgrImage object:

ScaledImData = BYTSCL(uiImageData, MIN=0, MAX=65535)

oImage = OBJ_NEW('IDLgrImage', ScaledImData, /GREYSCALE)

To have the GPU do the scaling, load the unscaled image data into the IDLgrImage and set INTERNAL_DATA_TYPE to 3 (32-bit floating point):

oImage = OBJ_NEW('IDLgrImage', uiImageData, $

INTERNAL_DATA_TYPE=3, /GREYSCALE, SHADER=oShader)

The fragment shader is extremely simple. Here, the reserved uniform variable, _IDL_ImageTexture, represents the base image in IDL:

uniform sampler2D _IDL_ImageTexture;

void main(void)

{

// Copy the texel straight through; OpenGL performs the byte conversion.

gl_FragColor = texture2D(_IDL_ImageTexture, gl_TexCoord[0].xy);

}

All we are doing is reading the texel with texture2D and setting it in gl_FragColor. You will notice that there is no explicit conversion to byte because this is handled by OpenGL. The value written into gl_FragColor is a GLSL type vec4 (4 floating point values, RGBA). OpenGL clamps each floating point value to the range 0.0 to 1.0 and converts it to unsigned byte where 0.0 maps to 0 and 1.0 maps to 255. So all we have to do is read the texel value from _IDL_ImageTexture and set it into gl_FragColor.
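The conversion that OpenGL performs can be modeled on the CPU to see which byte a given input value ends up as. The following Python sketch is illustrative only: the function names are hypothetical, the normalization c / 65535 is assumed for 16-bit input, and round-to-nearest is assumed for the final byte conversion, which may differ slightly between OpenGL implementations.

```python
def normalize_uint16(c):
    """Assumed normalization of a 16-bit unsigned value, so that 65535 maps to 1.0."""
    return c / 65535.0


def to_display_byte(f):
    """Clamp to [0.0, 1.0], then map 0.0 -> 0 and 1.0 -> 255 (rounding is an assumption)."""
    clamped = min(max(f, 0.0), 1.0)
    return int(clamped * 255.0 + 0.5)


# Show the displayed byte for the bottom, middle, and top of the 16-bit range.
for c in (0, 32768, 65535):
    print(c, to_display_byte(normalize_uint16(c)))
```

Values outside the 0.0 to 1.0 range are clamped rather than wrapped, which is why the shader does not need an explicit range check.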

Displaying an 11-bit UINT Image

An 11-bit unsigned integer image is usually stored in a 16-bit UINT array, but with only 2048 (2^11) values used. For this example, the minimum value is 0 and the maximum is 2047. Traditionally this would be converted to byte as follows:

ScaledImData = BYTSCL(uiImageData, MIN=0, MAX=2047)

oImage = OBJ_NEW('IDLgrImage', ScaledImData, /GREYSCALE)

To scale on the GPU we again load the image with the original data. This time INTERNAL_DATA_TYPE can be set to 2 (16-bit float) as this can hold 11-bit unsigned integer data without loss of precision:

oImage = OBJ_NEW('IDLgrImage', uiImageData, $

INTERNAL_DATA_TYPE=2, /GREYSCALE, SHADER=oShader)
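The claim that a 16-bit float holds 11-bit data without loss can be checked numerically. The Python sketch below is a rough model, not a description of how IDL or the GPU stores textures: it assumes the texel is stored as the normalized value c / 65535 in IEEE 754 half precision, and the helper names are hypothetical.

```python
import struct


def to_half(x):
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack('<e', struct.pack('<e', x))[0]


def survives_half(bits):
    """Return True if every value of the given bit depth is recoverable after half storage."""
    for c in range(2 ** bits):
        stored = to_half(c / 65535.0)        # assumed normalized texel value
        if round(stored * 65535.0) != c:     # try to recover the original integer
            return False
    return True


print(survives_half(11))   # 11-bit data
print(survives_half(12))   # 12-bit data, for comparison
```

Under these assumptions the 11-bit range round-trips exactly, while a 12-bit range does not, which is consistent with choosing INTERNAL_DATA_TYPE=2 only for data of 11 bits or fewer.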

The fragment shader looks like the following where _IDL_ImageTexture represents the base image in IDL:

uniform sampler2D _IDL_ImageTexture;

void main(void)

{

// Rescale so that the 11-bit maximum (2047) maps to 1.0.

gl_FragColor = texture2D(_IDL_ImageTexture,

gl_TexCoord[0].xy) * (65535.0 / 2047.0);

}

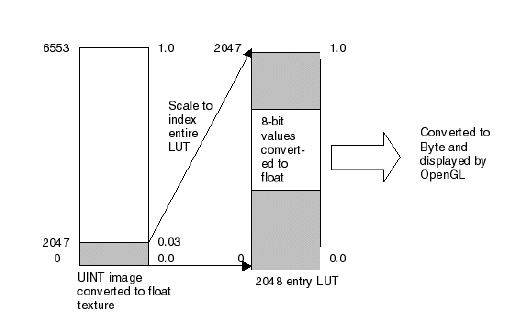

The only difference between this 11-bit example and the previous 16-bit example is the scaling of each texel. When the 16-bit UINT image is converted to floating point, the equation c/(2^16 - 1) is used, so 65535 maps to 1.0. However, the maximum value in the 11-bit image is 2047, which is 0.031235 when converted to floating point. This needs to be scaled to 1.0 before being assigned to gl_FragColor if we want 2047 (image maximum) to map to 255 (maximum intensity) when the byte conversion is done. (Remember that a value of 1.0 in gl_FragColor is mapped to 255.)
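The arithmetic behind the scale factor can be worked through on the CPU. This Python sketch only models the numbers quoted above; the names are hypothetical and the byte conversion assumes round-to-nearest.

```python
FULL_SCALE = 65535   # 2^16 - 1, the divisor used when converting UINT to float
IMAGE_MAX = 2047     # 2^11 - 1, the largest value present in the 11-bit image


def to_byte(f):
    """Clamp to [0.0, 1.0] and convert to a byte (rounding is an assumption)."""
    clamped = min(max(f, 0.0), 1.0)
    return int(clamped * 255.0 + 0.5)


texel = IMAGE_MAX / FULL_SCALE    # value the shader reads for the image maximum
scale = FULL_SCALE / IMAGE_MAX    # factor applied in the fragment shader

print(round(texel, 6), round(scale, 3))
print(to_byte(texel), to_byte(texel * scale))   # unscaled vs. scaled display byte
```

Without the scale factor the brightest pixel in the image would be displayed as a very dark grey; with it, the image maximum reaches full intensity.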

It's possible to implement the full byte scale functionality on the GPU, and let the user interactively specify the input min/max range by passing them as uniform variables. There is a performance advantage to doing this on the GPU as the image data only needs to be loaded once and the byte scale parameters are changed by modifying uniform variables. See IDLgrShaderBytscl for more information.
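As a rough illustration of what a byte scale with adjustable input range computes per pixel, the following Python sketch applies a linear min/max stretch followed by the clamp and byte conversion. It is not the IDLgrShaderBytscl implementation and does not reproduce the exact rounding of BYTSCL; the function and parameter names are hypothetical.

```python
def byte_scale(c, in_min, in_max):
    """Linearly map [in_min, in_max] to [0, 255], clamping values outside the range."""
    t = (c - in_min) / float(in_max - in_min)   # the two range values play the role of uniforms
    t = min(max(t, 0.0), 1.0)                   # clamp, as OpenGL does for gl_FragColor
    return int(t * 255.0 + 0.5)                 # rounding mode is an assumption


# Changing the range only changes two numbers; the image data is untouched.
print(byte_scale(1500, 0, 2047))
print(byte_scale(1500, 1000, 2000))
```

The point of the sketch is that the pixel data is an input that never changes, which is why only the two range parameters need to be updated when the user adjusts the display.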

Displaying an 11-bit UINT Image with Contrast Adjustment

The previous example applied a linear scaling to the 11-bit data to convert it to 8-bit for display purposes. Sometimes it is useful to apply a non-linear function when converting to 8-bit to perform contrast adjustments to compensate for the non-linear response of the display device (monitor, LCD, projector, etc.).

For an 11-bit image this can be achieved using a LUT with 2048 entries where each entry contains an 8-bit value. This is sometimes referred to as an 11-bit in, 8-bit out LUT, which uses an 11-bit value to index the LUT and returns an 8-bit value.

This is relatively simple to implement on the GPU. First, create the 2048-entry contrast enhancement LUT and load it into an IDLgrImage, which will be passed to the shader as a texture map (see Applying Lookup Tables Using Shaders for more information).

x = 2*!PI/256 * FINDGEN(256)

lut = BYTE(BINDGEN(256) - SIN(x)*30)

lut = CONGRID(lut, 2048)

oLUT = OBJ_NEW('IDLgrImage', lut, /IMAGE_1D, /GREYSCALE)

oShader->SetUniformVariable, 'lut', oLUT

The image is created as before:

oImage = OBJ_NEW('IDLgrImage', uiImageData, $

INTERNAL_DATA_TYPE=2, /GREYSCALE, SHADER=oShader)

The fragment shader looks like the following, where _IDL_ImageTexture represents the base image in IDL and lut is the lookup table:

uniform sampler2D _IDL_ImageTexture;

uniform sampler1D lut;

void main(void)

{

// Rescale the texel so that 2047 maps to 1.0, the top of the LUT.

float i = texture2D(_IDL_ImageTexture,

gl_TexCoord[0].xy).r * (65535.0 / 2047.0);

gl_FragColor = texture1D(lut, i);

}

The texel value is scaled before being used as an index into the LUT. The following figure shows how the 11-bit to 8-bit LUT is indexed. Only a fraction of the input data range is used (0 - 2047 out of a possible 0 - 65535). Because 2047 (0.0312 when converted to float) is the maximum value, it should index the top entry in the LUT, so we scale it to 1.0 by multiplying by 32.015 (65535/2047). The range of values in the image (0 - 2047) then indexes the entire range of entries in the LUT.
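The effect of the 32.015 scale factor on LUT indexing can be modeled as follows. This Python sketch makes two assumptions that the text does not state: the 1D texture lookup selects the nearest texel, and coordinates at or beyond 1.0 are clamped to the last entry. The names are hypothetical.

```python
LUT_SIZE = 2048


def lut_texel_index(c, scale=65535.0 / 2047.0):
    """Return which LUT entry a 16-bit stored value c would select."""
    coord = (c / 65535.0) * scale               # texture coordinate passed to the LUT lookup
    index = int(coord * LUT_SIZE)               # nearest-texel selection (assumed)
    return min(max(index, 0), LUT_SIZE - 1)     # clamp at the texture edge (assumed)


# With the scale factor, each image value selects its own LUT entry.
print(lut_texel_index(0), lut_texel_index(1024), lut_texel_index(2047))

# Without it, even the image maximum only reaches a low LUT entry.
print(lut_texel_index(2047, scale=1.0))
```

Under these assumptions every image value from 0 to 2047 selects the LUT entry with the same number, whereas an unscaled lookup would use only the first few dozen entries.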

Although this could be done on the CPU, it is much more efficient to do it on the GPU since the image data only needs to be loaded once and the display compensation curve can be modified by changing data in the IDLgrImage holding the LUT.